OpenAI Native Image Generation - Generative Reality, Generative UI, and more

A short post on native image generation in LLMs, OpenAI’s GPT-4o release and the Studio Ghibli trend, and applications of native image generation like “Generative UI” and “Generative Reality”.

OpenAI Native Image Generation

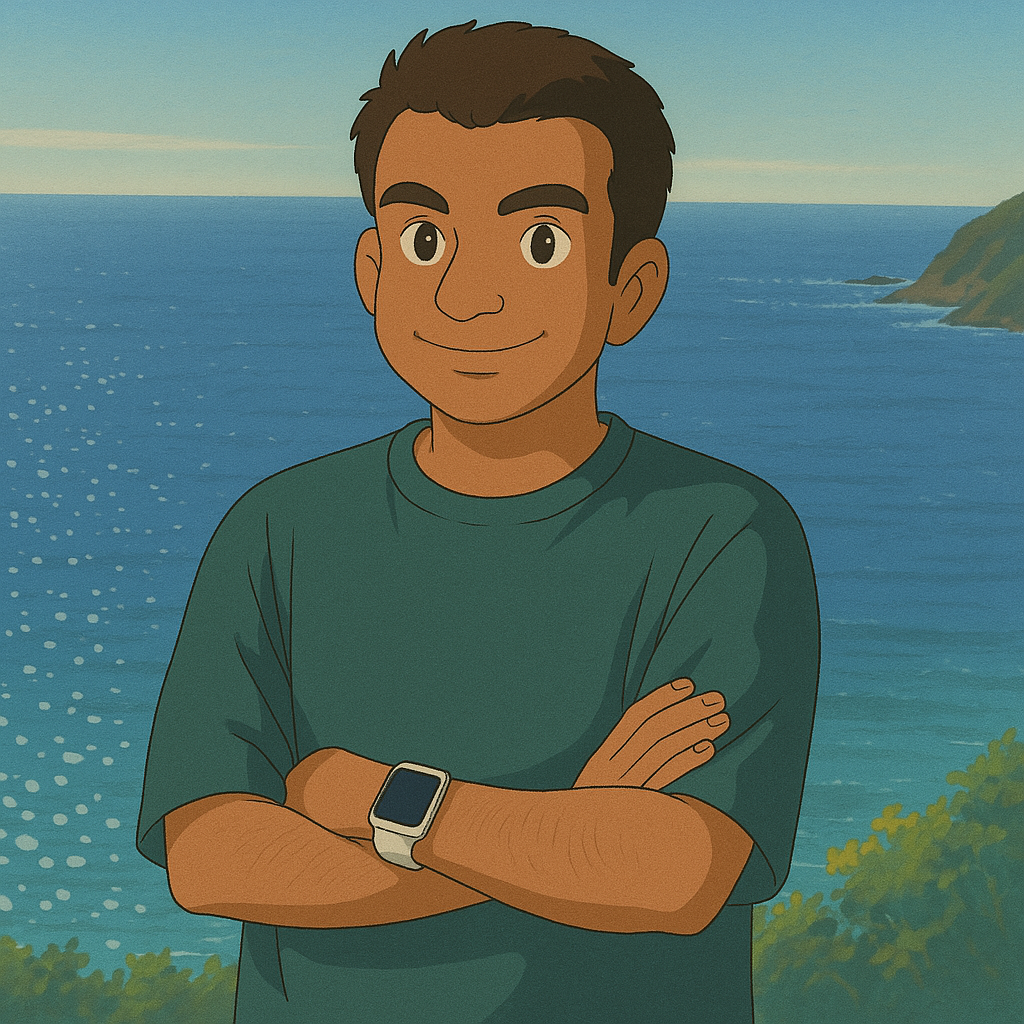

OpenAI announced native image generation in GPT-4o on March 25th, 2025. Immediately after the announcement, Machine Learning TPOT (This Part of Twitter) was flooded with tweets showcasing the model’s ability to generate a version of a provided image in the style of Studio Ghibli, an animation style popularized by Hayao Miyazaki and Isao Takahata. The “offending” tweet by @GrantSlatton is provided below (left). People were quick to jump on the bandwagon, posting “Ghiblified” images of their friends and families, as well as iconic pictures from pop and meme culture.

tremendous alpha right now in sending your wife photos of yall converted to studio ghibli anime pic.twitter.com/FROszdFSfN

— Grant Slatton (@GrantSlatton) March 25, 2025

Iconic movie scenes, Studio Ghibli style. This is so much fun! pic.twitter.com/LwjkNjcEV9

— Mufaddal Durbar (@MDurbar) March 26, 2025

Even though generating Ghiblified versions of images is fun, these native image generation models are capable of much more. They can potentially remove watermarks from images, add or remove subjects, help with interior design, and more. What’s interesting is that despite all the hype about AI art and AI-generated images, the most popular use case right now seems to be modifying existing images of friends, family, and pets in various styles. Imitation is the sincerest form of flattery, and the fact that people are using these models to add filters on top of their own photos reveals two things:

- True creativity is still exceptionally rare and very valuable.

- Providing some notion of control over the output space rather than a blank canvas is more appealing to the average user.

This newfound capability raises a natural question: what exactly is native image generation, and how does it differ from earlier approaches? Let’s dive into the technical details.

What is Native Image Generation?

Earlier image generation models like DALL-E 2 and Imagen relied on dedicated architectures such as diffusion models (typically conditioned on a separate text encoder such as CLIP), Vision Transformers, or Generative Adversarial Networks (does anyone still remember GANs?). In contrast, newer models like GPT-4o, Grok 3, and gemini-2.0-flash-exp-image-generation (yes, that’s really the name 🤦️) are truly multimodal. These models can generate images and audio in an autoregressive manner, much like they generate text. The GIF below illustrates how autoregressive text generation works in an earlier model like GPT-2. Autoregressive in the context of an LLM means that to generate the next token, the model conditions its prediction on the provided context (the system prompt and the user prompt) and on the previously generated tokens. For instance, if the system prompt is “Answer the user’s question in a single sentence” and the prompt is “Recite the first law of robotics”, the following steps are taken:

- The model (GPT-2) encodes the system prompt and the user prompt, computing Query, Key, and Value vectors for every prompt token in each attention layer of the transformer. The Keys and Values are cached and act as the model’s internal state.

- The model generates the first token, "A", based on this internal state.

- The model appends "A" to its context, updating the internal state, and then uses this updated state to predict the next token, "robot".

- The model continues this process until the end of the sequence is reached, either at a maximum length or when it emits a special token like `<|endoftext|>`.
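The decoding loop described in these steps can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `TOY_TABLE` and `toy_next_token` are a hypothetical stand-in for GPT-2's forward pass (a real model scores every vocabulary token using the cached Keys and Values, then samples or picks the most likely one), the system prompt is omitted, and tokens are whole words for readability.

```python
from typing import Callable, List

END_OF_TEXT = "<|endoftext|>"

def generate(next_token: Callable[[List[str]], str],
             prompt: List[str],
             max_new_tokens: int = 20) -> List[str]:
    """Greedy autoregressive decoding: every new token is conditioned on the
    prompt plus all tokens generated so far."""
    context = list(prompt)
    generated: List[str] = []
    for _ in range(max_new_tokens):       # stop condition 1: maximum length
        token = next_token(context)
        if token == END_OF_TEXT:          # stop condition 2: special end token
            break
        context.append(token)             # feed the output back into the context
        generated.append(token)
    return generated

# Hypothetical toy "model": predicts the next word from the last word only.
TOY_TABLE = {
    "robotics": "A", "A": "robot", "robot": "may", "may": "not",
    "not": "injure", "injure": "a", "a": "human", "human": "being",
}

def toy_next_token(context: List[str]) -> str:
    return TOY_TABLE.get(context[-1], END_OF_TEXT)

prompt = "Recite the first law of robotics".split()
print(" ".join(generate(toy_next_token, prompt)))  # prints: A robot may not injure a human being
```

A real LLM conditions on the whole context rather than just the last word, but the control flow (predict, append, repeat, stop) is the same.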

As the other image on the right shows, multimodal models like GPT-4o treat text, image pixels, and audio waveforms as different kinds of tokens and are trained jointly across all three modalities. During generation, they predict one token at a time, whether that token represents a word, a piece of an image, or a slice of audio. In a very naive sense, the model can be thought of as generating pixels from left to right and top to bottom.
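To make the naive left-to-right, top-to-bottom picture concrete, here is a sketch that generates a tiny grayscale "image" one pixel at a time in raster-scan order. The `toy_predict` rule (border pixels are 1, every other pixel is the sum of its upper and left neighbors) is a hypothetical stand-in for a learned model; the point is only that each prediction may look at previously generated pixels and nothing after them.

```python
from typing import Callable, List

Predictor = Callable[[List[int], int, int, int], int]

def raster_generate(height: int, width: int, predict: Predictor) -> List[List[int]]:
    """Generate pixels left-to-right, top-to-bottom. `predict` only sees the
    flat list of pixels generated so far (the causal prefix)."""
    prefix: List[int] = []
    for idx in range(height * width):
        row, col = divmod(idx, width)
        prefix.append(predict(prefix, row, col, width))
    # Reshape the flat raster sequence back into rows.
    return [prefix[r * width:(r + 1) * width] for r in range(height)]

def toy_predict(prefix: List[int], row: int, col: int, width: int) -> int:
    """Hypothetical predictor: border pixels are 1; interior pixels are the sum
    of the pixel above and the pixel to the left (both already generated)."""
    if row == 0 or col == 0:
        return 1
    above = prefix[(row - 1) * width + col]
    left = prefix[row * width + col - 1]
    return above + left

for line in raster_generate(3, 4, toy_predict):
    print(line)
# [1, 1, 1, 1]
# [1, 2, 3, 4]
# [1, 3, 6, 10]
```

Real models emit image tokens (often patch or codebook indices) rather than raw pixels, but the ordering constraint is the same.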

The main advantage of this approach is efficiency: we no longer need specialized systems for different modalities, streamlining both training and inference. Additionally, these models leverage cross-modal relationships, enhancing their contextual understanding of scenes.

How Does It Really Work?

Multimodal LLMs fall into two main categories:

- Models that process multiple input modalities (e.g., images, audio, text) but only generate text as output, such as Llama 3.2.

- Models that can also generate images or audio as output, like GPT-4o and Grok 3.

Deeper dive into multi-modal models

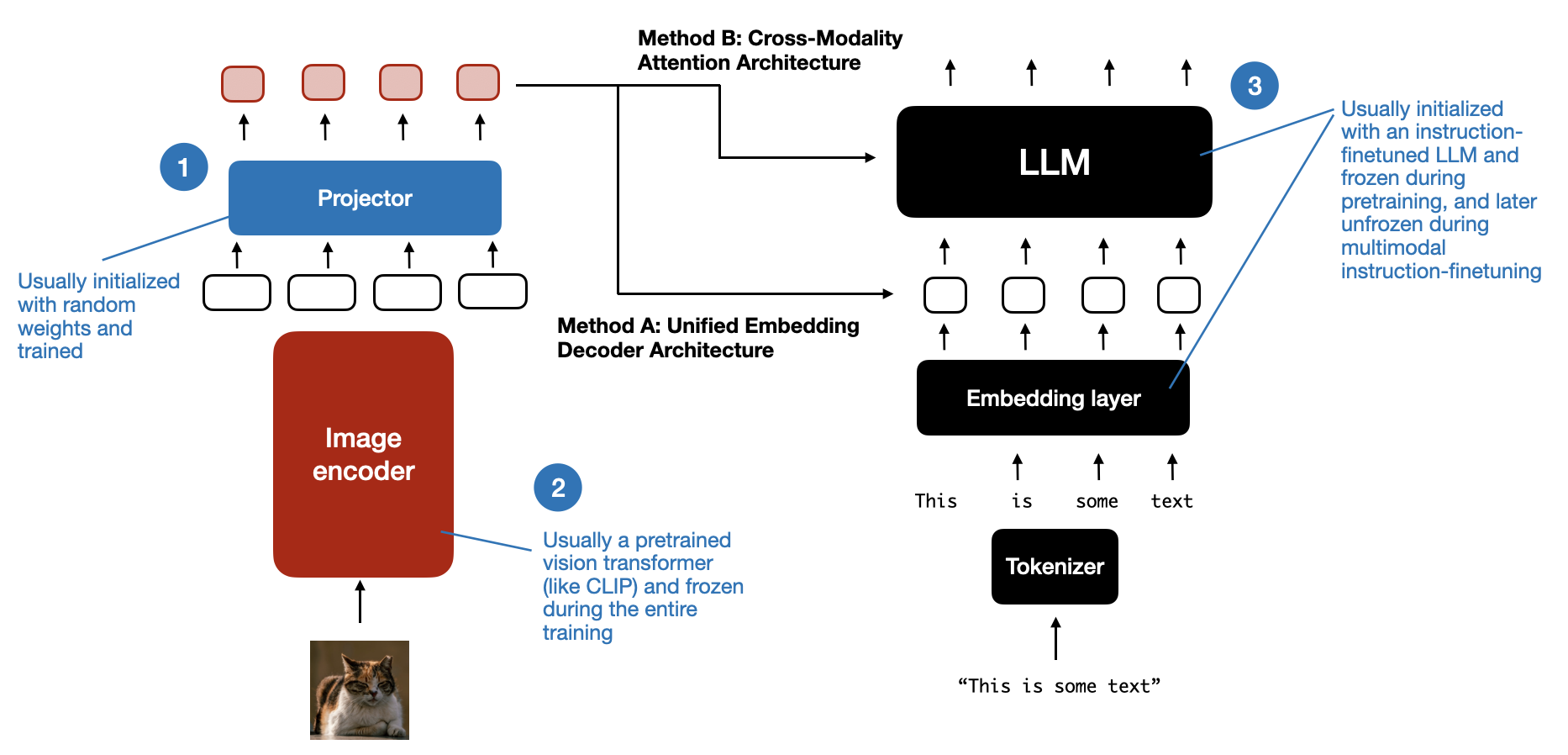

The mechanism for processing multimodal inputs is roughly similar in both types of models, but output generation differs. The accompanying image shows how a multimodal LLM can process an image and text together to generate a response.

- These multimodal models typically split an image into smaller patches of 16 x 16 or 32 x 32 pixels, ordered left to right, top to bottom.

- These patches are fed as a sequence to another model, typically a Vision Transformer (ViT), which produces a representation for each patch. These intermediate representations are then passed through a linear projection layer, which resizes the image representations to the same dimensionality as the input text embeddings so that the image embeddings live in the same “latent space” as the text embeddings.

- This alignment is achieved by training the model, specifically the projection layers, on a large dataset of text-image pairs after the base LLM has finished training.
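The patch-and-project pipeline described above can be sketched with NumPy. This is a minimal sketch under simplifying assumptions: the ViT is replaced by an identity function, `W_proj` is random rather than trained, and `D_TEXT = 64` is a hypothetical text-embedding width. A real model would run the patches through a trained vision encoder and learn the projection on text-image pairs.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles in
    left-to-right, top-to-bottom order and flatten each tile to a vector."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of patch")
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)           # (rows, cols, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)      # (num_patches, patch*patch*C)

rng = np.random.default_rng(0)
D_TEXT = 64                                          # hypothetical text-embedding width

image = rng.random((64, 64, 3), dtype=np.float32)    # stand-in for a real photo
patches = patchify(image, patch=16)                  # (16, 768): a 4 x 4 grid of patches

# Stand-in for the ViT: identity. A real model encodes the patches first.
vision_features = patches

# Linear projection into the text-embedding space (random here, learned in practice).
W_proj = rng.normal(scale=0.02, size=(vision_features.shape[1], D_TEXT)).astype(np.float32)
image_tokens = vision_features @ W_proj              # (16, 64)

# Image tokens and (hypothetical) text embeddings can now share one sequence.
text_tokens = rng.normal(size=(5, D_TEXT)).astype(np.float32)
sequence = np.concatenate([image_tokens, text_tokens], axis=0)
print(patches.shape, image_tokens.shape, sequence.shape)  # (16, 768) (16, 64) (21, 64)
```

Because the projection output has the same width as the text embeddings, the LLM can attend over image and text tokens in a single sequence.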

Models from different research groups use varying training approaches, especially regarding which layers to freeze and which to update, but it is common to update only the linear projection layer and the image encoder during training. For instance, see this snippet from the Llama 3.2 blog post:

To add image input support, we trained a set of adapter weights that integrate the pre-trained image encoder into the pre-trained language model. The adapter consists of a series of cross-attention layers that feed image encoder representations into the language model. We trained the adapter on text-image pairs to align the image representations with the language representations. During adapter training, we also updated the parameters of the image encoder, but intentionally did not update the language-model parameters. By doing that, we keep all the text-only capabilities intact, providing developers a drop-in replacement for Llama 3.1 models.

This blog post by Sebastian Raschka provides a more technical deep dive into how multimodal models are trained and the state-of-the-art models in this space as of late 2024. However, it does not cover the generation/decoding process for models like GPT-4o and Grok 3, which not only process multimodal inputs but can also generate images in an autoregressive manner. Autoregressive image generation is not a new idea: it was explored as far back as 2016 in papers like PixelRNN, which simply used an RNN to predict the next pixel in an image given the previous pixels.
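PixelRNN's "next pixel given the previous pixels" idea corresponds to the standard chain-rule factorization of the image distribution. For an \(n \times n\) image \(\mathbf{x}\) with pixels \(x_1, \dots, x_{n^2}\) taken in raster-scan order:

\[
p(\mathbf{x}) = \prod_{i=1}^{n^2} p\left(x_i \mid x_1, \dots, x_{i-1}\right)
\]

Each factor is what the network is trained to predict. Generation samples \(x_1\), then \(x_2\) given \(x_1\), and so on, exactly like next-token prediction in text.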

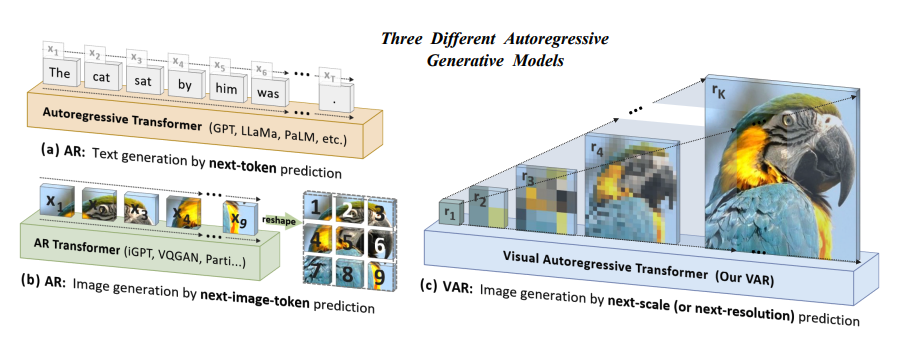

However, there have been several improvements to such models in recent years, most of them falling into two areas:

- How images are encoded (e.g., CLIP or VQ-VAE), and

- How the autoregressive generation process itself works, moving from sequential visual-token generation in raster-scan order to more complex processes like Visual AutoRegressive modeling (VAR), which generates images coarse-to-fine via “next-scale prediction” (also called “next-resolution prediction”).
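VQ-VAE-style encoding is what turns an image into discrete tokens that a language model can predict. Its core step, snapping each continuous encoder output to its nearest entry in a learned codebook, is sketched below with NumPy. The tiny `codebook` and `z` values are made up for illustration; in a real VQ-VAE both come from trained networks, and the codebook typically has hundreds or thousands of entries.

```python
import numpy as np

def vector_quantize(z: np.ndarray, codebook: np.ndarray):
    """Map each row of z (N, D) to the index of its nearest codebook entry
    (K, D) under squared Euclidean distance. Returns (ids, quantized vectors)."""
    dists = ((z[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)  # (N, K)
    ids = dists.argmin(axis=1)                                          # (N,)
    return ids, codebook[ids]

codebook = np.array([[0.0, 0.0], [1.0, 1.0], [10.0, 10.0]])   # K = 3 entries, D = 2
z = np.array([[0.1, -0.2], [0.9, 1.2], [9.0, 11.0]])          # toy encoder outputs
ids, z_q = vector_quantize(z, codebook)
print(ids)  # [0 1 2]: these integers are the "visual tokens"
```

An autoregressive model can then predict these integer ids just as it predicts text token ids, and the VQ-VAE decoder maps them back to pixels.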

Visual AutoRegressive modeling was introduced in the 2024 NeurIPS Best Paper award-winning paper “Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction”.

- The model first generates a low-resolution image starting from a \(1 \times 1\) token map, and then progressively increases the resolution by having the transformer-based model predict the next, higher-resolution token map.

- Each higher-resolution map is conditioned on all previous lower-resolution maps, which is what makes the generation process autoregressive.

- Importantly, the authors empirically validate scaling laws and the zero-shot generalization potential of the VAR model, which closely mirror those observed in other LLMs.
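The coarse-to-fine loop can be sketched as follows. This only illustrates the control flow of next-scale prediction under loud assumptions: `toy_predict` is a hypothetical stand-in for VAR's transformer, the scale schedule `(1, 2, 4, 8)` is illustrative, and real VAR token maps are discrete codes from a multi-scale VQ-VAE rather than the integers used here. The key property is that all tokens of a scale are produced in one step, conditioned on every coarser map.

```python
from typing import Callable, List

import numpy as np

Predictor = Callable[[List[np.ndarray], int], np.ndarray]

def upsample(token_map: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a square map to (size, size)."""
    factor = size // token_map.shape[0]
    return np.kron(token_map, np.ones((factor, factor), dtype=token_map.dtype))

def next_scale_generate(predict: Predictor, scales=(1, 2, 4, 8)) -> List[np.ndarray]:
    """Coarse-to-fine generation: the whole (size x size) map at each scale is
    produced in one step, with all coarser maps passed in as context."""
    maps: List[np.ndarray] = []
    for size in scales:
        maps.append(predict(maps, size))
    return maps

def toy_predict(prev_maps: List[np.ndarray], size: int) -> np.ndarray:
    """Hypothetical stand-in for VAR's transformer: start from zeros, then
    upsample the most recent map and add a constant 'detail' term."""
    if not prev_maps:
        return np.zeros((size, size), dtype=int)
    return upsample(prev_maps[-1], size) + len(prev_maps)

maps = next_scale_generate(toy_predict)
print([m.shape for m in maps])  # [(1, 1), (2, 2), (4, 4), (8, 8)]
```

Compared with raster-scan generation, the number of sequential steps grows with the number of scales rather than the number of tokens.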

Drama behind this Best Paper

The lead author of this paper, a ByteDance intern at the time, was accused of sabotaging other researchers’ training jobs within ByteDance by modifying the PyTorch source code. More details are available in this Reddit post, but I haven't verified the veracity of these claims.

The diagram above shows the process of VAR generation as described in the paper. This is not the only way to generate images autoregressively, and it isn’t clear whether GPT-4o or other native image generation models like Gemini 2.0 Pro Experimental and Grok 3 use similar techniques. There has been some speculation that GPT-4o performs autoregressive generation of images in raster-scan order (left to right, top to bottom), with generation happening at all scales simultaneously. Another hypothesis is that two separate models are involved: GPT-4o generates tokens in the image latent space, and a separate diffusion-based decoder turns them into the actual image (see the tweets below). Given the artifacts visible in the ChatGPT UI, such as top-down generation and blurry intermediates, I believe the latter hypothesis is more likely.

Okay my working hypothesis for 4o image generation is that it is jointly performing autoregressive inference (raster scanline order) on an image pyramid at all scales simultaneously. https://t.co/WMoidIuvN3

— Jon Barron (@jon_barron) March 27, 2025

How would gpt-4o image generation work? Speculation:

- gpt-4o generates visual tokens, and the diffusion decoder decodes them to pixel space.

- Not just diffusion but Rolling Diffusion-like group-wise diffusion decoder, top->bottom ordering. pic.twitter.com/4uIv8m7aIq

— Sangyun Lee (@sang_yun_lee) March 28, 2025

Is any of this new?

The resounding answer is no.

In fact, some of the style transfer results shown earlier are reminiscent of results from DeepDream and from the Neural Style Transfer work by Gatys et al. in their 2016 paper “Image Style Transfer Using Convolutional Neural Networks”. The main caveats are that those results weren’t as impressive, and they relied on completely different architectures and training methods. Several other models, like Google’s Gemini, are multimodal as well and support similar image generation capabilities. Here’s the Gemini announcement, for instance:
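For comparison with today's native models, the core of Gatys et al.'s style representation fits in a few lines: an image's "style" at a given CNN layer is summarized by the Gram matrix of that layer's feature maps, and the per-layer style loss is the scaled squared difference between Gram matrices. The sketch below follows the per-layer normalization from the paper, but the random arrays are hypothetical stand-ins for the VGG feature maps the paper uses, and the full method also sums this loss over several layers and adds a content loss before optimizing the image's pixels.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """features: (N, H, W) feature maps from one layer -> (N, N) Gram matrix of
    channel-wise inner products."""
    n = features.shape[0]
    f = features.reshape(n, -1)          # (N, M) with M = H * W
    return f @ f.T

def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Single-layer style loss: squared Gram-matrix difference scaled by
    1 / (4 N^2 M^2)."""
    n, h, w = generated.shape
    m = h * w
    diff = gram_matrix(generated) - gram_matrix(style)
    return float((diff ** 2).sum() / (4 * n**2 * m**2))

rng = np.random.default_rng(0)
style_feats = rng.random((8, 16, 16))       # stand-in for activations of the style image
generated_feats = rng.random((8, 16, 16))   # stand-in for activations of the image being optimized
print(style_loss(generated_feats, style_feats))
```

Unlike native image generation, this approach optimizes a single image per style using a pretrained classifier's features, rather than sampling from a model trained to generate images.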

Gemini 2.0 Flash debuts native image gen! Create contextually relevant images, edit conversationally, and generate long text in images. All totally optimized for chat iteration.

— Oriol Vinyals (@OriolVinyalsML) March 12, 2025

Try it in AI Studio or Gemini API. Blog: https://t.co/pkeRzaD8b5 pic.twitter.com/c7QUzNfC4k

However, none of them managed to capture the public imagination like this release from OpenAI. The reasons for the relative lack of fanfare around the Gemini announcement were twofold:

- The Gemini releases were not product-centric. The Gemini image generation model is only available as a preview model in Google AI Studio and via the Gemini API, not in the main Gemini web interface. GPT-4o, on the other hand, was available to users on the main ChatGPT website immediately after the announcement. This is where the network effects of ChatGPT’s over 400 million Weekly Active Users (WAUs) come into play.

- The other harsh truth is that the image outputs of the Gemini model are simply not as good as those of the GPT-4o model. Results from both models on the same prompts are shown in the next section.

Putting OpenAI’s image generation to the test

Are the results worth the hype?

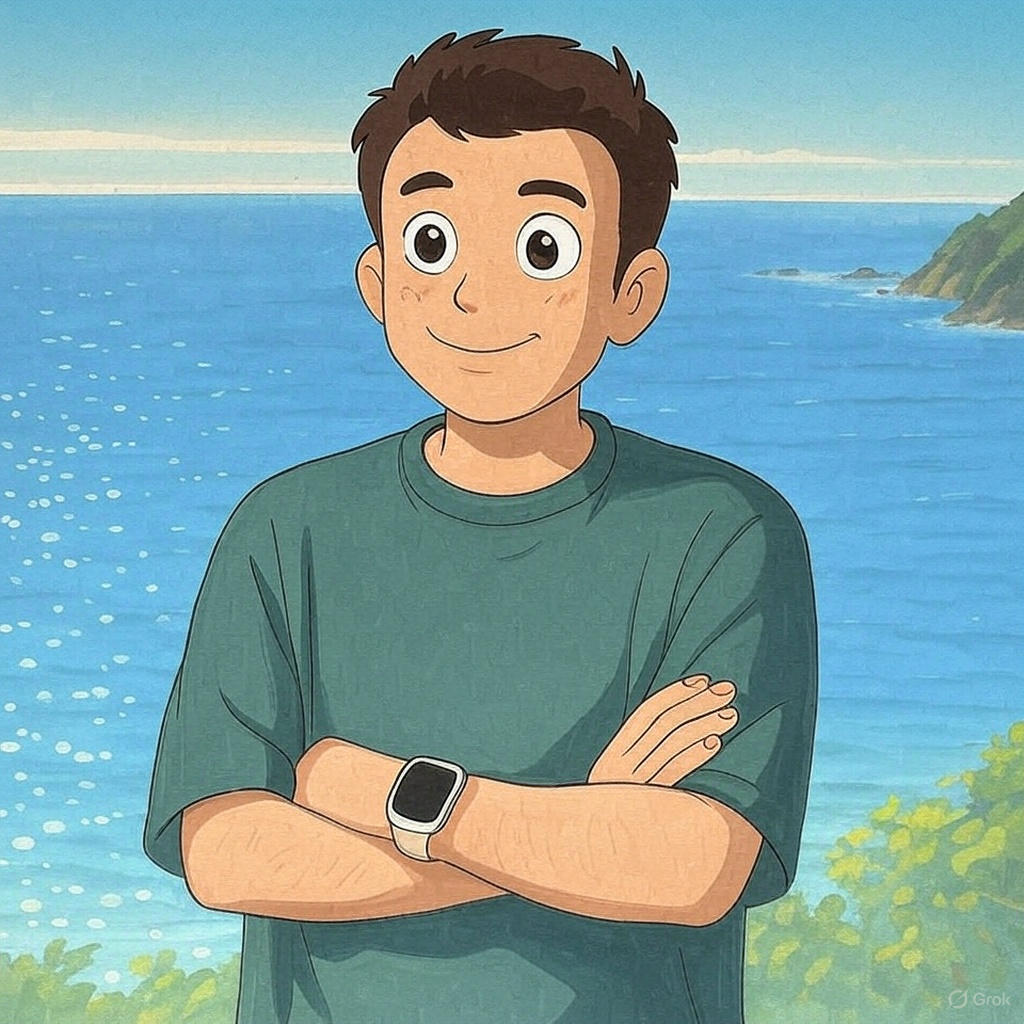

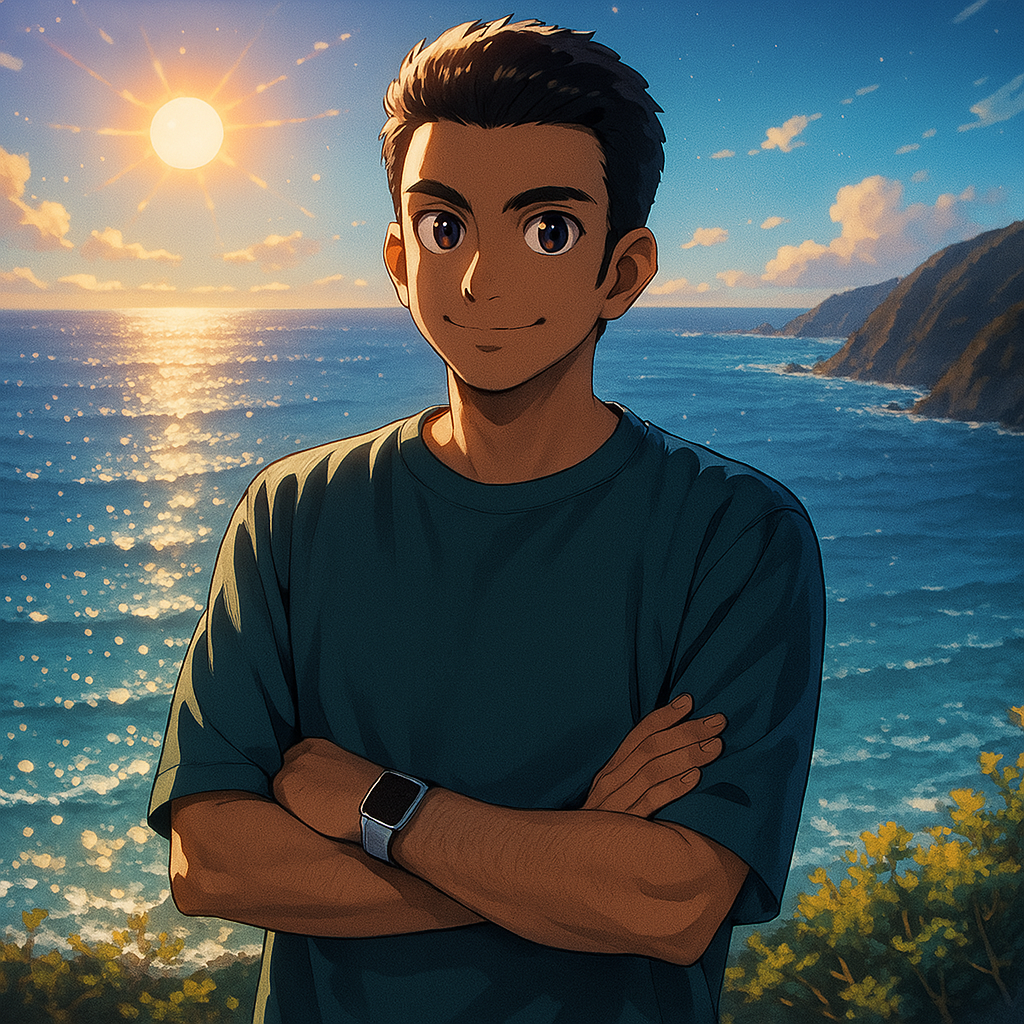

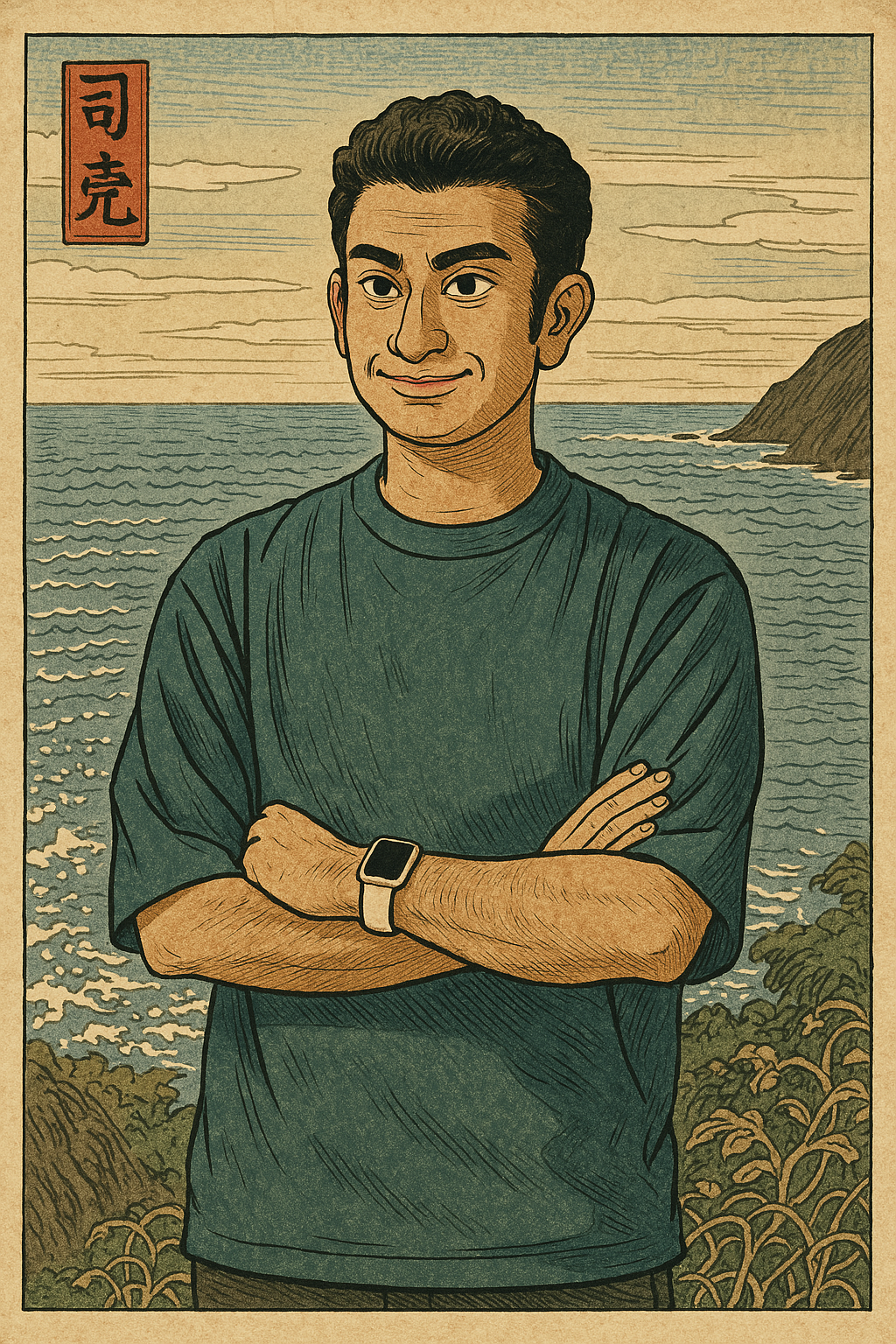

I compared the quality of several multimodal models, namely OpenAI’s GPT-4o, xAI’s Grok 3, and Google’s Gemini 2.0 Pro Experimental, by testing them on the same input image and prompt. Following up on the Studio Ghibli trend, the prompt was “Create image - convert this to Studio Ghibli style”. The final image generated by each model is shown here for comparison. Both GPT-4o and Grok 3 generate pretty reasonable and visually appealing results, while the Gemini model does not capture the intent of the prompt as well. In further tests on images with more people, GPT-4o edges out Grok 3 in several cases, but the two models are roughly on par overall.

GPT-4o is also great at generating images in the style of other artists, animation studios, and art movements. For instance, it can generate art in the style of Ukiyo-e, Art Deco, Pixar, Minecraft, Ufotable, and more. It can also arbitrarily change the background or context of an image, as shown in the comparison slider on the bottom right. In this example, I have a “ghiblified” image of myself and a group of friends at Crater Lake, and I asked the model to change the background to Sequoia National Park with the following prompt: “Create image - convert this to Studio Ghibli but replace the background with Sequoia National Park”. Prompts for changing backgrounds don’t work well when operating on the original image directly: modifying the prompt slightly to “Create image - replace the background with Sequoia National Park” results in a spectacular failure. These models are only going to get better with time, which raises some concerns about what is real and what is not. But the fact is that this technology is here to stay, and we have no choice but to adapt to it.

The failure output isn’t shown here, out of respect for my friends’ privacy.

Is it a fad or a big deal?

The Studio Ghibli trend went viral on social media, and it was akin to the original ChatGPT moment, albeit on a much smaller scale. Like any viral trend on social media, this too will eventually fade away. However, unlike other highly hyped trends in the Generative AI space, such as video generation models like Pika AI and autonomous agents like Devin AI and Manus, this trend seems more secular (i.e., likely to have a lasting impact). According to Sam Altman, native image generation has already brought OpenAI over 1 million new users in a single hour and overloaded its servers, forcing it to impose temporary rate limits on free as well as paid users.

Real world use-cases of this technology

Right now, the biggest use case for these models seems to be simply generating cute/fun images in different styles. And even this simple use case will result in the release of new consumer-facing applications and tools. However, this technology also opens up several other interesting possibilities, especially in the virtual and augmented reality space, as well as in the realm of user interfaces.

Generative Reality

As the capabilities of these models improve and the latency of generating images drops, there is a future where these models can generate images in real time and even alter our perception of the reality around us. This is what I call “Generative Reality”, a term I made up. The term “Generative Reality” (GR) hasn’t been widely explored in academic literature, but the idea has appeared in various forms in science fiction shows like Pantheon (a must-watch for science fiction fans) and books like Ready Player One and Snow Crash. Most of these books and shows, however, focus on Virtual Reality (VR), and “Generative Reality” is slightly different. Unlike Augmented Reality, which overlays information on top of the real world, GR alters the way we perceive the world by generating new images in real time via style transfer while keeping the generated images grounded in reality. For example, an Apple Vision Pro-like headset could potentially replace the “real world” with a Studio Ghibli-style world, as shown below. The existence of native image generation models like GPT-4o and Grok 3 makes this a real possibility at some point in the future.
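Real-time Generative Reality ultimately comes down to a latency budget: each restyled frame must be produced within one display refresh interval. The arithmetic below is a back-of-the-envelope sketch; the 90 Hz refresh rate and the 10-second generation time are hypothetical round numbers chosen for illustration, not measurements of any headset or model.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one frame at a given refresh rate."""
    return 1000.0 / refresh_hz

def required_speedup(generation_ms: float, refresh_hz: float) -> float:
    """How many times faster generation must become to fit in one frame."""
    return generation_ms / frame_budget_ms(refresh_hz)

REFRESH_HZ = 90.0            # hypothetical headset refresh rate
GENERATION_MS = 10_000.0     # hypothetical time to restyle one image

print(f"budget per frame: {frame_budget_ms(REFRESH_HZ):.1f} ms")                    # 11.1 ms
print(f"speedup needed:   {required_speedup(GENERATION_MS, REFRESH_HZ):.0f}x")      # 900x
```

Under these assumed numbers, generation would need to become roughly three orders of magnitude faster, before even accounting for tracking and display overhead.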

This article describes a more dystopian example: using VR headsets to show a “unique summer field simulation program” to dairy cows to improve milk yields. A slightly less dystopian (but still dystopian) example is using GR to “solve” the Daylight Saving Time problem.

Dystopian Nightmare: A solution to switching the clocks

The debate around permanent Standard Time versus Daylight Saving Time resurfaces every year in March and November, when the clocks are set forward or back. Proponents of permanent Standard Time argue it better aligns with natural circadian rhythms, while Daylight Saving advocates favor extended evening daylight for leisure. Here’s a tongue-in-cheek (and dystopian) idea: instead of changing clocks, Generative Reality could adjust how the world looks based on personal preference. Prefer Daylight Saving Time? Your headset could render the world as if it’s an hour ahead.

Generative UI

A much more practical use case for native image generation is “Generative UI” — using generative models to create adaptive, context-aware user interfaces.

Generative UI can be used to create personalized user interfaces that adapt to the user’s needs and preferences in real time. For example, if a user is booking a business trip via Airbnb, the generative UI could automatically adjust the layout to focus more on commute time to downtown or on the Wi-Fi speed at the listing. A family vacation booking, on the other hand, might look more like the existing Airbnb interface, with greater emphasis on family-friendly amenities, safety features, and cost comparisons, as shown below. Unlike “Generative Reality”, this is not a pipe dream, and it is already supported to a certain extent by tools like Vercel’s AI SDK.
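One common way to keep generative UI predictable is to let the model choose and order components from a fixed catalog instead of emitting arbitrary markup. The sketch below shows that pattern in Python under loud assumptions: the component names, the JSON layout format, and the `business_layout` string standing in for a model's output are all hypothetical, and this is not the API of Vercel's AI SDK (which is a TypeScript library).

```python
import json
from typing import List

# Hypothetical catalog of pre-built components the app knows how to render.
ALLOWED_COMPONENTS = {
    "price_summary", "commute_to_downtown", "wifi_speed", "workspace_photos",
    "family_amenities", "safety_features", "cost_comparison",
}

def parse_layout(model_output: str) -> List[str]:
    """Validate a model-proposed layout such as {"sections": ["a", "b"]} and
    return the ordered component names, rejecting anything not in the catalog."""
    sections = json.loads(model_output).get("sections", [])
    unknown = [s for s in sections if s not in ALLOWED_COMPONENTS]
    if unknown:
        raise ValueError(f"model proposed unknown components: {unknown}")
    return sections

# Hypothetical model output for a business-trip query.
business_layout = '{"sections": ["commute_to_downtown", "wifi_speed", "price_summary"]}'
print(parse_layout(business_layout))  # ['commute_to_downtown', 'wifi_speed', 'price_summary']
```

A family-vacation query would simply yield a different ordering, for example `family_amenities`, `safety_features`, and `cost_comparison`, rendered by the same set of components.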

What’s Next?

In terms of immediate industry headwinds, companies like Adobe, Figma, and stock photo platforms like Shutterstock and Unsplash are likely to be affected the most by the rise of native image generation models.

- Adobe and Figma are the most obviously affected, as their tools are heavily used for graphic design, UI/UX design, mockups, and image editing. Even if current models cannot fully replace professional design tools, they will only get better at instruction following over time and will likely replace these tools for more casual users and hobbyists.

- The other companies facing significant headwinds are stock photo hosting and sharing platforms like Shutterstock and Unsplash. As image generation keeps improving, the need for licensing stock photos will continue to diminish, and the role of these companies will be “diminished” to licensing images for training these models. However, the timeline for this is not clear, since other image generation models like Midjourney and Stable Diffusion have been around for a while and have not significantly disrupted this market yet.

- There are also potential tailwinds for AR/VR companies like Meta, Apple, Samsung, and more, since these models can help create new user interfaces that are likely to make the AR/VR experience more immersive and personalized.

- Social media companies like Meta, Snapchat, and TikTok could also integrate these tools into their platforms to let users add more interactive filters to their stories and posts, increasing user engagement and retention.

World Modeling using 3D Data and Video

As far as the core LLM technology is concerned, the logical next step would be to train these multi-modal models on even larger datasets, and even more modalities like video, and 3D models. Companies like World Labs are already working on 3D world generation using LLMs / Foundation Models, and future frontier models like Gemini-3.0, Grok 4, GPT-6 and more will likely have support for autoregressive video and 3D world generation as well. The priors for this happening are quite high, due to the existence of several large datasets of videos and 3D models available on the internet:

- Video datasets: All the content in YouTube, TikTok, movies, and more

- 3D datasets: Data from various sources like Lidar scans from autonomous vehicles, 3D assets from game engines and tools like Unreal Engine, Unity, and Blender, and spatial reconstruction data from NeRFs (Neural Radiance Fields) built from smartphone cameras and other devices.