Putting 2025 in Perspective - State of the Tech/LLM Industry

It’s 2025 now, so happy new year everyone! 🎉 This blog post arrives a few days later than it was supposed to, because, guess what, I am a master procrastinator. But hey, better late than never, right? 😅 This is going to be the first of many posts that I aim to write as part of my personal blog called “Essential Matrix”. I will primarily focus on writing about the tech and tech-adjacent industry, with random musings and ramblings interspersed in between.

Anyways, enough about my blog, and back to the main theme of this post: a short look back at 2024, some cool facts that make the year 2025 special, and putting 2025 in perspective with respect to notable historical events. Starting with some random facts:

- 2025 is a perfect square, as it can be expressed as \(45^2\). For most people born in the late 20th or early 21st century, this will most likely be the only perfect square year they witness in their lifetime, unless you are Bryan Johnson or there is a major breakthrough in longevity research. The next perfect square year will be \(46^2 = 2116\), which is \(91\) years away. The gap between consecutive perfect square years keeps increasing, and can be expressed as \(2n + 1\), where \(n\) is the square root of the earlier year. Here’s some quick maths for it: \((n + 1)^2 - n^2 = 2n + 1\), so for \(n = 45\) the gap is \(2 \cdot 45 + 1 = 91\).

- Continuing with more maths:

- 2025 also heralds the beginning of a new generation: Generation Beta. Definitely not the best name for a generation, given the negative connotations, but let’s not digress into that.
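The perfect-square arithmetic from the first fact is easy to double-check in a few lines of Python. This is just a minimal sketch: the helper names `is_perfect_square` and `next_perfect_square` are made up for illustration and are not from any library.

```python
import math


def is_perfect_square(year: int) -> bool:
    """Return True if `year` is the square of an integer."""
    root = math.isqrt(year)  # integer square root, rounded down
    return root * root == year


def next_perfect_square(year: int) -> int:
    """Return the smallest perfect square strictly greater than `year`."""
    root = math.isqrt(year)
    return (root + 1) ** 2


year = 2025
n = math.isqrt(year)                    # 45
following = next_perfect_square(year)   # 46^2 = 2116
gap = following - year                  # should match 2n + 1

print(year, is_perfect_square(year), following, gap, 2 * n + 1)
# prints: 2025 True 2116 91 91
```

Using `math.isqrt` instead of `math.sqrt` keeps everything in integer arithmetic, so there is no floating point rounding to worry about.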

Things that remained the same in 2024

- For starters, One Piece is still running; Winds of Winter is still not out; and GTA 6 is yet to be released. George R. R. Martin is the most prolific procrastinator of them all 😅.

- Adding to the list of things that didn’t happen:

- Flying cars are still not a thing, and Peter Thiel’s quote about “We wanted flying cars, instead we got 140 characters” still holds true.

- Nuclear fusion is still only a decade away from solving all of humanity’s energy problems. The first test for ITER is supposedly in 2039.

- Quantum computing is also still not a thing - although Google’s Willow announcement did demonstrate significant breakthroughs in the field.

- Sutton’s bitter lesson still continues to hold true, and the frontier models continue to improve significantly. Claude Sonnet 3.5 (New), aka Sonnet 3.6, is a major part of my daily workflow. Competition in this space is heating up, with new models constantly dethroning the previous ones in LM Arena. Personally, I am most excited by the DeepSeek V3 announcement, and can’t wait to try their 671 Billion parameter model out.

- LLM providers working on frontier models continue to be really bad at naming their models. The names just don’t make any sense: it would be far clearer to rename “Claude Sonnet 3.5 (New)” to “Claude Sonnet 3.6” and “Gemini 2.0 Flash Thinking” to something simpler like “Gemini Reasoning 1.0”. OpenAI’s “o1” and “o3” naming conventions are confusing as well: is “GPT 4o” supposed to be the “better” model, or “GPT o1”? Someone not in tune with the latest developments in the field would be completely lost.

Things that I didn’t expect in 2024

- Apple finally announced support for RCS, and enabled it from iOS 18.1 onwards.

- Continuing on the Apple theme, they also finally released an iPhone with a USB-C port, and upgraded the base memory in their Mac lineup to 16GB.

- Python 3.13 was finally released, and it makes the Global Interpreter Lock (GIL) optional via an experimental free-threaded build.

  - For anyone unaware of the story of the GIL and Python, it’s simply a mutex that protects access to Python objects and allows only one thread to control the Python interpreter at a time. This makes scaling truly multi-threaded Python applications hard.

  - This PEP describes the issue in detail, and honestly, does a way better job explaining it than I ever could.

- The scaling laws for LLMs don’t seem to be holding as well as expected, and a lot of research focus has shifted towards scaling test-time compute, as seen in models like OpenAI’s o1 and o3 and DeepMind’s Gemini 2.0 Flash Thinking.
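To make the GIL point from the Python 3.13 item a bit more concrete, here is a rough sketch that runs the same CPU-bound work serially and then across threads. The function names, `LIMIT`, and `WORKERS` are my own illustrative choices. `sys._is_gil_enabled()` only exists from Python 3.13 onwards, so the sketch assumes the GIL is on when that function is missing. On a regular build the two timings should come out roughly the same, while on a free-threaded build (the one described in PEP 703) the threaded run can actually use multiple cores. Exact numbers will depend on your machine.

```python
import sys
import time
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound work: count primes below `limit` by trial division."""
    count = 0
    for candidate in range(2, limit):
        if all(candidate % d for d in range(2, int(candidate ** 0.5) + 1)):
            count += 1
    return count


def gil_enabled() -> bool:
    """Report whether the GIL is active; assume it is on interpreters older than 3.13."""
    check = getattr(sys, "_is_gil_enabled", None)
    return True if check is None else check()


def timed(label, fn):
    """Run `fn`, print how long it took, and return its result."""
    start = time.perf_counter()
    result = fn()
    print(f"{label}: {time.perf_counter() - start:.2f}s")
    return result


LIMIT, WORKERS = 20_000, 4  # hypothetical workload size and thread count

# Same work four times in a row on one thread.
serial = timed("serial", lambda: [count_primes(LIMIT) for _ in range(WORKERS)])

# Same work spread over four threads.
with ThreadPoolExecutor(max_workers=WORKERS) as pool:
    threaded = timed("threaded", lambda: list(pool.map(count_primes, [LIMIT] * WORKERS)))

print("GIL enabled:", gil_enabled())
assert serial == threaded  # both approaches must compute the same counts
```

The work here is deliberately pure Python with no I/O, since I/O-bound threads already release the GIL while waiting and would hide the effect.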

What the future holds

The world changes quickly, and it seems like the pace of change is only increasing with time. To put things into perspective, in 1989, the Internet as we know it today was not a thing, there were no smartphones, and most of today’s biggest tech companies, like Google, Amazon, Facebook, and Netflix, had not even been founded. Software had not yet eaten the world, and this is clearly visible when looking at a plot of the top 5 companies by market cap in 1989:

There’s only one American company in the top 5; the rest were all Japanese. The dominant industries were Banking, Energy, and Automobiles, and the Tech industry was still in its nascent stages. The only American tech company in the top 10 was IBM. Industrial Bank of Japan was the most valuable, with a market cap of $104.3 Billion, but that pales in comparison to Apple’s gross income of roughly $180 Billion in 2024. The dramatic shift in the markets is best understood by looking at the same plot as of 2024:

In 2025, the top 5 companies by market cap are all tech companies, and three of the top five were founded after 1989. All of the top five are American, and only one Japanese company remains in the top 50: Toyota. The tech industry is absolutely dominant, and the dominance of the American Big Tech companies is staggering: only a handful of companies in the top 20 are not American, among them Saudi Aramco, TSMC, and Tencent. A lot of this dominance can be attributed to the zero marginal cost of software, and to the network and aggregation effects that accumulate over time. However, this zero marginal cost might not continue to hold in the future, especially with the rise of LLMs and new reasoning models like o1, o3, and Gemini 2.0 Flash Thinking, which spend compute at test/inference time as well. If we are to believe Sam Altman, the ChatGPT Pro subscription, which costs $200 per month, is still losing money for OpenAI.

How it all ties back to the present and the near future

The reason I bring up the launch of the iPhone as a reference point is that it would have been possible for someone in 2007 to predict the dominance of the tech giants in 2025, even if no one could have predicted the exact set of companies that would dominate. In fact, an astute observer might have predicted that companies like Google, Amazon, Facebook, and Apple would be the top companies by market cap in 2025, and they would have been mostly right.

The public release of the first transformer model in 2017, via the “Attention is all you need” paper, is most likely a similar seminal moment in the tech industry. 2017 was only eight years ago, but we can already see the ripple effects of the transformer model across the industry. Nvidia’s market cap in 2015 was roughly $17 Billion, and it was not even in the top 100 companies by market cap. Now, in 2025, Nvidia is the second or third most valuable company in the world, depending on the day. A good question to ask is whether someone in 2025 can make reasonable predictions about the top companies by market cap in 2033, exactly eight years from now. For posterity’s sake, here are my predictions:

- Nvidia - no surprises here. Nvidia’s GPUs are fundamental to all parts of the LLM ecosystem, and it’s hard to see them lose ground in the training/inference compute market given their CUDA ecosystem and moat.

- Google - another no-brainer, given Google’s dominance in the search market, their incredible AI research team, access to vast amounts of compute and specialized hardware like TPUs, and access to sources of data that no other company has, like YouTube videos, Search history, and Maps/Navigational data. A lot of the benefits of foundation models will also accrue to the aggregators, and Google would be the biggest beneficiary given their ownership of Google Search, Android, Chrome, and YouTube.

- Apple - while Apple is not at the forefront of the AI/LLM space, they will still be one of the biggest beneficiaries of the rise of LLMs simply due to their ownership of the App Store and the iOS ecosystem. Any time someone subscribes to ChatGPT Pro or Google’s new Gemini models, Apple gets a cut of the subscription revenue. Additionally, Apple can always use the frontier models from OpenAI or Google to distill knowledge and train their own smaller models for on-device inference, and off-load any heavy lifting to these LLM providers. Thus, Apple’s current AI strategy seems to make a lot of sense financially speaking, with the big risk being that LLMs may fundamentally change the way software is written and consumed, leaving Apple behind, just like Blackberry was left behind when the iPhone was launched.

- Microsoft/OpenAI - again, not much needs to be said here. OpenAI has continuously been at the forefront of pushing frontier models, and Microsoft, just like Google, Meta, and Apple, will be a big beneficiary of the rise of LLMs due to their position as an aggregator. Microsoft is fully committed to investing in this space, and plans to invest over $80 Billion in building data centers. This number is mind-boggling, but makes sense if you consider that Microsoft does not want to miss out on the next computing paradigm shift, given their abysmal record in the mobile domain.

- Meta - they have a great research team, and also have access to vast amounts of data from Facebook, Instagram, and WhatsApp, which is simply not available to any other company. Additionally, as an aggregator, they benefit from the network effects of the platform, and the dominance of Generative AI models for image and video generation would be hugely beneficial for their advertising business.

Other than these five companies, the notable runners-up would be Amazon (AWS, duh), SpaceX (if they go public), and Eli Lilly (Mounjaro and other GLP-1 receptor agonists are doing really well). The interesting thing to note about my predictions, which can obviously be wrong, is that they assume no new competitors will emerge in the next eight years. There are two main reasons for this assumption:

- While the zero marginal cost of software allowed several companies to emerge, leapfrog the incumbents, and dominate the tech industry, the LLM/AI paradigm shift is fundamentally different. As Doug O’Laughlin recently put it in his blog post, marginal costs are coming for tech companies, and the death of ZIRP (Zero Interest Rate Policy) means that the incumbents are the best equipped to handle the rising costs of compute.

- Additionally, the emergence of newer models like DeepSeek V3 seems to indicate that frontier LLMs might soon be a commodity, and that the real value would be in the data and the underlying network effects of the platform. This is where aggregators like Google, Meta, Apple, and Microsoft have the biggest moat, and it’s hard to see any new entrant breaking into these respective moats/de-facto monopolies.

One major source of risk that these predictions do not account for is the regulatory risk these companies face, especially in the antitrust domain. However, barring any major regulatory changes or AT&T-style breakups, this should not have a major impact on the dominance of these companies.

Zooming out

While making predictions about the future is fun, it’s important to zoom out and realize that the future is inherently unpredictable. Roy Amara famously made the following observation, now known as Amara’s Law:

We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

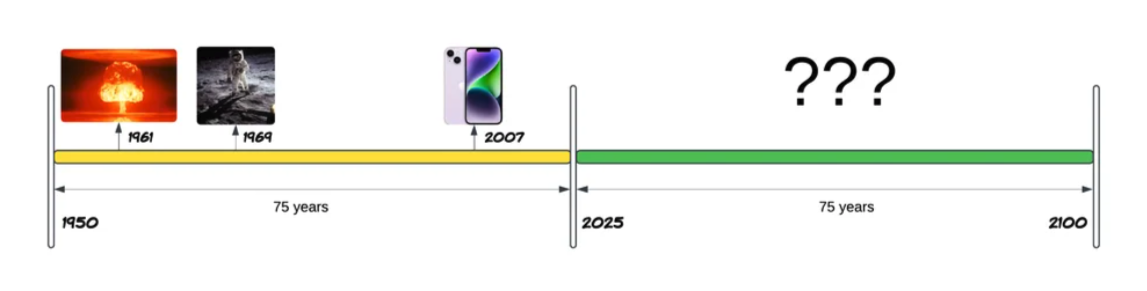

In essence, even if the progress in the next eight years is not as revolutionary as the last eight years (which is highly unlikely), the cumulative effect of the progress in various domains will continue to compound, and the future will be vastly different from what we can imagine today. For instance, the year 2025 is exactly as close to the year 2100 as it is to the year 1950: 75 years either way. The illustration below shows some of the major technological breakthroughs that happened in the last 75 years, primarily in the bits and atoms domain.

Specifically, humanity mastered quantum mechanics, resulting in the development of the Tsar Bomba, the most powerful nuclear weapon ever detonated. Humans also designed the first transistor, landed on the moon, and developed the internet in this time frame. And finally, the other big breakthrough was the launch of the iPhone, which fundamentally changed the way most people interact with technology. The next 75 years are likely to have just as many, if not more, breakthroughs: mastering fusion, figuring out quantum computing, colonizing Mars, developing AGI, and maybe even curing cancer and aging.

That’s all for this post - see you in the next one! 🚀